Opensource SW #06 | Machine Learning

November 26, 2022, 19:13

Introduction to ML

📌 Machine Learning

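Machine learning (ML) is the study of computer algorithms that improve automatically through experience and data. More specifically, it is the science of developing algorithms and statistical models that computer systems use to perform tasks without explicit instructions, relying on patterns and inference instead.

ML can be regarded as a subfield of artificial intelligence (AI).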

📌 Feature

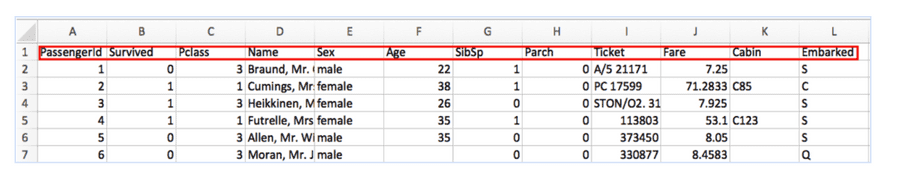

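A machine learning model takes as input data made up of values called features. A feature is an individual attribute that describes the data, expressed in a form that a machine (rather than a person) can process.

For example, input data has to be laid out as in the table below for an ML model to work with it. Each column (attribute), such as Name, Age, or Ticket, is a feature.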

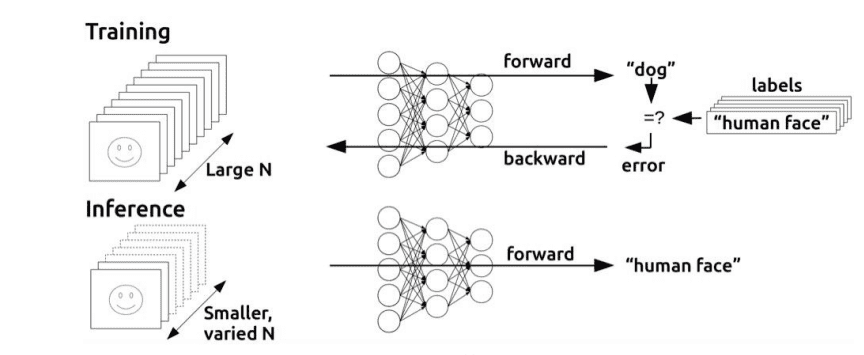
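To make the idea concrete, here is a minimal sketch of a feature table built with pandas. Only the column names Name, Age, and Ticket come from the text above; the rows and their values are invented for illustration.

```python
import pandas as pd

# Hypothetical passenger records; every value here is made up.
passengers = pd.DataFrame(
    {
        "Name": ["Alice", "Bob", "Carol"],
        "Age": [29, 41, 35],
        "Ticket": ["T-001", "T-002", "T-003"],
    }
)

# Each column is one feature; each row is one sample.
feature_names = list(passengers.columns)
n_samples, n_features = passengers.shape
print(feature_names)
print(n_samples, n_features)
```

Text columns such as Name usually have to be encoded as numbers before most models can use them.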

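📌 Training & Inference

From the outside, working with ML breaks down into two phases.

- Training: the model is repeatedly fed data and its parameters are adjusted until it produces the correct answers.

- Inference: the trained model predicts results for data it has not seen before (e.g. deciding that an input image shows a dog).

Once a model has been trained sufficiently, it is released as a service, and that is where inference happens. In other words, inference is the phase in which a trained model takes the data users supply and returns its predictions.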

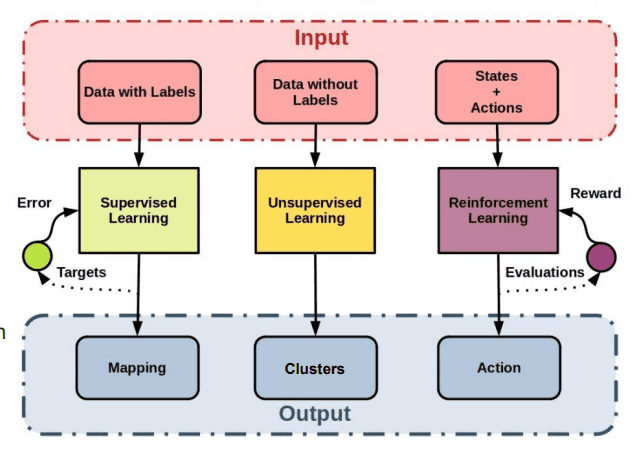
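The two phases can be sketched with scikit-learn as follows. This is only an illustration: the choice of LogisticRegression and the measurements of the "new" flower are assumptions, not something the original notes prescribe.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

iris = load_iris()
X, y = iris.data, iris.target

# Training: the model adjusts its internal parameters to fit the labeled data.
model = LogisticRegression(max_iter=1000)
model.fit(X, y)

# Inference: the trained model predicts the class of a sample it is handed.
new_flower = [[5.0, 3.4, 1.5, 0.2]]  # hypothetical measurements in cm
predicted = model.predict(new_flower)
predicted_name = iris.target_names[predicted[0]]
print(predicted_name)
```

In a real service the training step runs once, ahead of time, and only the `predict` call runs per request.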

📌 ML task categories

ML tasks fall into three broad categories.

- ¹Supervised Learning (지도 학습)

- ²Unsupervised Learning (비지도 학습)

- ³Reinforcement Learning (강화 학습)

A label is the value the model is expected to produce for a given input (that is, the correct answer, or target).

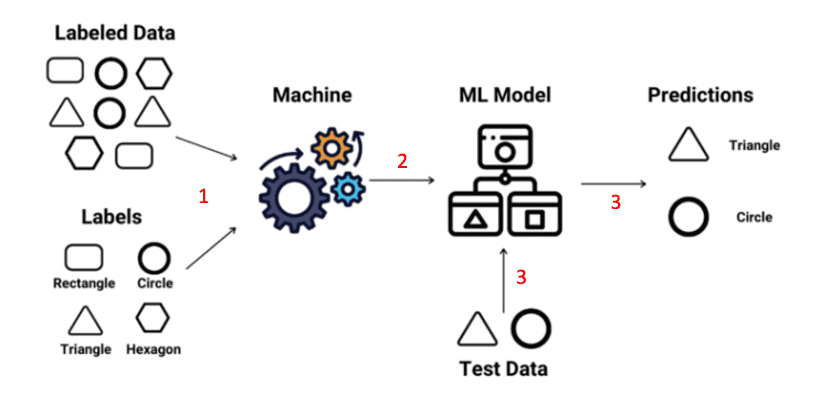

📌 ¹Supervised Learning

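- An image of a triangle (labeled data) is fed to the model together with its label, as if to say "this one is of the type triangle."

- The ML model repeatedly learns to predict whether an input is a triangle, a circle, a square, and so on.

- Each prediction is checked against the known label, which tells the model whether it was right or wrong, and the model keeps adjusting toward the correct answers. Test data, which the model did not see during training, is then used to check how well it has learned.

Once the model has been trained to the point where it produces correct results for the given inputs, it can be put to use in a service.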

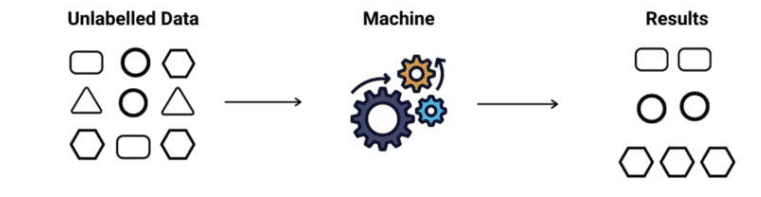

📌 ²Unsupervised learning

Compared with supervised learning:

- Supervised learning input: data + labels + training objectives

- Unsupervised learning input: data + training objectives

Typical unsupervised techniques include dimensionality reduction and clustering.

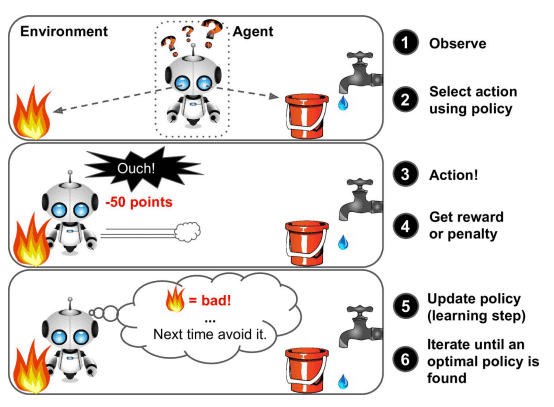
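As a rough sketch of both techniques, the snippet below applies PCA (dimensionality reduction) and KMeans (clustering) to the Iris measurements without ever touching the labels. PCA and KMeans are my choice of examples, and the seed and cluster count are arbitrary.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data  # the labels are deliberately ignored

# Dimensionality reduction: project the 4 features onto 2 components.
X_2d = PCA(n_components=2).fit_transform(X)

# Clustering: group the samples into 3 clusters using the data alone.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_ids = kmeans.fit_predict(X_2d)

print(X_2d.shape)
print(sorted(set(cluster_ids.tolist())))
```

The cluster ids are arbitrary integers; they are not guaranteed to line up with the species labels.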

📌 ³Reinforcement Learning

The model learns by trying out various actions and, through trial and error, accumulating experience about its environment.
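A toy illustration of trial-and-error learning is an epsilon-greedy agent on a multi-armed bandit. This technique is swapped in purely as an example and is not from the original notes; the reward probabilities, epsilon, and step count are all assumptions.

```python
import random

random.seed(0)

# Hypothetical environment: each action pays a reward of 1 with a fixed probability.
reward_probability = [0.2, 0.5, 0.8]

value_estimates = [0.0, 0.0, 0.0]  # the agent's learned estimate per action
action_counts = [0, 0, 0]
epsilon = 0.1  # fraction of steps spent exploring at random

for step in range(5000):
    # Explore occasionally, otherwise exploit the best-looking action.
    if random.random() < epsilon:
        action = random.randrange(3)
    else:
        action = max(range(3), key=lambda a: value_estimates[a])

    reward = 1.0 if random.random() < reward_probability[action] else 0.0

    # Incremental average: move the estimate toward the observed reward.
    action_counts[action] += 1
    value_estimates[action] += (reward - value_estimates[action]) / action_counts[action]

best_action = max(range(3), key=lambda a: value_estimates[a])
print(best_action, [round(v, 2) for v in value_estimates])
```

The agent is never told which action is best; it finds out from the rewards it collects while acting.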

scikit-learn

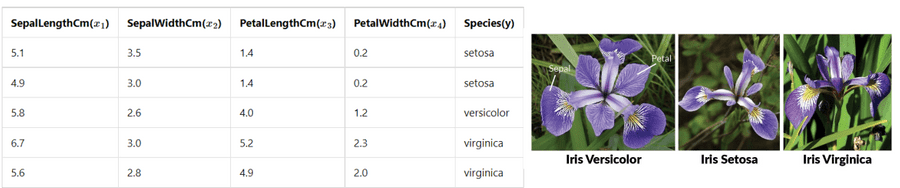

📌 Getting started with the Iris dataset

Among the available datasets, let's run a classification on the Iris dataset.

The Iris dataset has 4 features (SepalLengthCm, SepalWidthCm, PetalLengthCm, PetalWidthCm) and 3 classes (versicolor, setosa, virginica).

    from sklearn.datasets import load_iris

    dataset = load_iris()

The dataset is loaded with the load_iris function defined in sklearn.datasets.

📌 Dataset Properties

    print(dataset['data'].shape)
    print(dataset['data'][:3])
    # (150, 4)
    # [[5.1 3.5 1.4 0.2]
    #  [4.9 3.  1.4 0.2]
    #  [4.7 3.2 1.3 0.2]]

- Most ML algorithms in sklearn, including those applied to the Iris dataset, expect the data as a 2-D array. The rows are the samples and the columns are the features, so the array has the shape (number of samples, number of features).

- The Iris dataset holds 150 flower samples and 4 features.

shape: a tuple holding the dimensions of the dataset's array. (150, 4) means the Iris data is an array with 150 rows and 4 columns.

    print(dataset['target'].shape)
    # (150,)
    print(dataset.target)
    # [0 0 0 0 0 0 0 0 0 0 ......]
    print(dataset.target_names)
    # ['setosa' 'versicolor' 'virginica']

- target: the label of every sample in the dataset, encoded as an integer. A value of 0 means the sample belongs to the class at index 0.

- target_names: the names of the label classes in the dataset.
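The link between `target` and `target_names` can be sketched as below: indexing the name array with the integer labels gives one class name per sample, and `np.bincount` counts the samples per class. The variable names `label_names` and `class_counts` are only illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris

dataset = load_iris()

# Indexing target_names with the integer targets yields one name per sample.
label_names = dataset.target_names[dataset.target]
print(label_names[:3])

# Count how many samples belong to each class.
class_counts = np.bincount(dataset.target)
print(dict(zip(dataset.target_names, class_counts.tolist())))
```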

📌 Estimator Methods

- fit(param1, param2): trains the ML model on the data passed in. This is how an ML model is built from a dataset.

  - For supervised learning, pass two arguments, the data and the labels: classifier.fit(X, y)

  - For unsupervised learning, pass only the data: clustering_model.fit(X)

- predict(): produces an output for the input given to the model. After training has finished with fit, predict is what a service calls to get the model's output for new data.

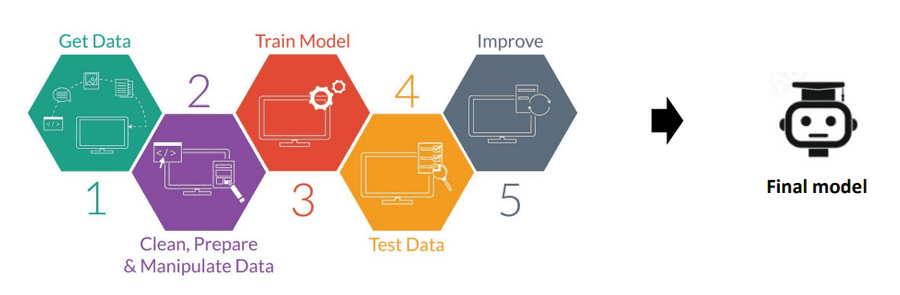

📌 The overall ML process

- Get Data: receive the input data from people.

- Clean, Prepare, Manipulate Data: split the data into training data and test data (used for performance evaluation).

- Train Model: train the model.

- Test Data: test the trained ML model.

- Improve: repeat steps 1 to 3 until a better ML model is obtained.

- Final Model: once the improvement loop is finished, the final ML model is produced.

📌 Split the dataset

Splitting the dataset means dividing its samples into training data and test data (used for performance evaluation).

Information from the test dataset must not leak into training. When it does, this is called data leakage.

The ratio between the two sets is adjustable; the training set is usually larger than the test set.

- train_test_split(X, y): splits the data into training data and test data.

      from sklearn.model_selection import train_test_split

      X_train, X_test, y_train, y_test = train_test_split(dataset.data, dataset.target, test_size=0.1)
      print(X_train.shape)
      print(X_test.shape)
      print(y_train.shape)
      print(y_test.shape)
      # (135, 4)
      # (15, 4)
      # (135,)
      # (15,)

- test_size: with test_size=0.1, 10% of the data becomes test data and 90% becomes training data. (The default is 0.25.)
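Because the split is random, results change from run to run. A hedged sketch of a reproducible variant is shown below; `random_state` and `stratify` are real `train_test_split` parameters, but using them here, and the seed value 42, are my additions rather than part of the original notes.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

dataset = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    dataset.data,
    dataset.target,
    test_size=0.1,
    random_state=42,          # arbitrary seed, chosen only for reproducibility
    stratify=dataset.target,  # keep the class ratio the same in both subsets
)
print(X_train.shape, X_test.shape)
print(np.bincount(y_test))
```

With stratification, each of the three equally sized classes contributes the same number of samples to the test set.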

📌 Transformation

The scales of different features can differ greatly.

e.g. if the values of feature1 are around 0.1, 0.3, 0.6 while the values of feature2 are around 10,000 or 500 million, the model will have a hard time learning.

The features therefore need to be brought to a common scale. The class used for this is StandardScaler.

This step, rescaling the data after the dataset is split and before it is fed to the model, is called transformation.

Each value is rescaled with z = (x - u) / s, where u is the mean and s is the standard deviation of the feature.

- StandardScaler(): brings the features to a common scale.

      from sklearn.preprocessing import StandardScaler

      print(X_train[:3])
      print(StandardScaler().fit(X_train[:3]).transform(X_train[:3]))
      # [[5.1 3.4 1.5 0.2]
      #  [5.9 3.2 4.8 1.8]
      #  [5.7 2.8 4.1 1.3]]
      # [[-1.37281295  1.06904497 -1.38526662 -1.3466376 ]
      #  [ 0.98058068  0.26726124  0.93916381  1.0473848 ]
      #  [ 0.39223227 -1.33630621  0.44610281  0.2992528 ]]

  The fit method (not to be confused with an estimator's fit, which trains a model) computes the mean u and the standard deviation s, and transform computes (x - u) / s.
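To check the formula by hand, the sketch below recomputes (x - u) / s with NumPy on the three sample rows shown above and compares the result with StandardScaler. The variable names are illustrative.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# The three sample rows printed above.
X = np.array([[5.1, 3.4, 1.5, 0.2],
              [5.9, 3.2, 4.8, 1.8],
              [5.7, 2.8, 4.1, 1.3]])

scaler = StandardScaler().fit(X)
scaled = scaler.transform(X)

# Manual computation: u is the per-column mean, s the per-column
# population standard deviation (NumPy's default, ddof=0).
u = X.mean(axis=0)
s = X.std(axis=0)
manual = (X - u) / s

print(np.allclose(scaled, manual))
print(np.allclose(scaler.mean_, u))
```

In practice the scaler is usually fitted on the training data only and then reused to transform the test data, so that no statistics from the test set leak into training.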

📌 Pipeline

With the data transformed, the next step is to feed it to the ML model for training. A Pipeline makes this easy to wire up.

make_pipeline creates a Pipeline object that handles the whole sequence, from transformation through training and prediction, in one go.

    from sklearn.pipeline import make_pipeline
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score
    from sklearn.preprocessing import StandardScaler

    pipe = make_pipeline(StandardScaler(), RandomForestClassifier())
    pipe.fit(X_train, y_train)
    # accuracy_score expects (y_true, y_pred)
    print(accuracy_score(y_test, pipe.predict(X_test)))
    # 0.9333333333333333

- Pass the objects to make_pipeline in the order in which they should run.

- StandardScaler(): as seen just above, creates the object that performs the transformation. Just pass it in; there is no need to call fit or transform yourself.

- RandomForestClassifier(): creates one of the classification models.

- accuracy_score(label, predict): computes the accuracy.

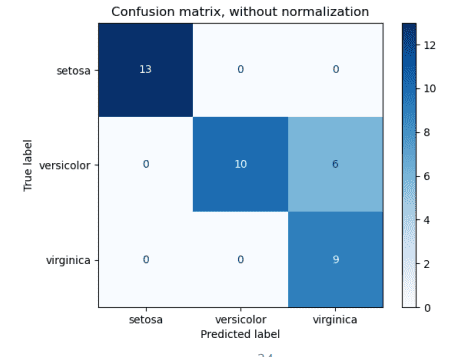
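To see what the pipeline does internally, the following sketch pulls the fitted steps back out through `named_steps` and repeats the prediction by hand. The seeds are arbitrary and only there to make the run repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

dataset = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    dataset.data, dataset.target, test_size=0.1, random_state=0
)

pipe = make_pipeline(StandardScaler(), RandomForestClassifier(random_state=0))
pipe.fit(X_train, y_train)

# make_pipeline names each step after its lowercased class name.
step_names = list(pipe.named_steps)
print(step_names)

# Equivalent manual route: scale with the fitted scaler, then ask the forest.
scaler = pipe.named_steps["standardscaler"]
forest = pipe.named_steps["randomforestclassifier"]
manual_pred = forest.predict(scaler.transform(X_test))
print((manual_pred == pipe.predict(X_test)).all())
```

The pipeline fits the scaler on the training data only, which is one reason to prefer it over scaling the whole dataset up front.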

📌 Confusion matrix

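A confusion matrix lays out in matrix form how many of the predictions were correct, so the result is easy to read at a glance.

One axis holds the true labels and the other the predicted labels. In sklearn, rows are true labels and columns are predictions.

- confusion_matrix(label, predict)

      from sklearn.metrics import confusion_matrix

      print(confusion_matrix(y_test, pipe.predict(X_test)))
      # [[5 0 0]
      #  [0 7 0]
      #  [0 1 2]]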
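The matrix can be read numerically as well. As a small sketch, the snippet below takes the matrix printed above and derives the overall accuracy and the per-class recall from it; the variable names are illustrative.

```python
import numpy as np

# The confusion matrix printed above: rows are true labels, columns are predictions.
cm = np.array([[5, 0, 0],
               [0, 7, 0],
               [0, 1, 2]])

# Correct predictions sit on the diagonal.
accuracy = np.trace(cm) / cm.sum()

# Per-class recall: the fraction of each true class that was predicted correctly.
recall_per_class = np.diag(cm) / cm.sum(axis=1)

print(accuracy)
print(recall_per_class)
```

Here 14 of the 15 test samples lie on the diagonal, which agrees with the accuracy reported in the Pipeline section; the single error is a sample of class 2 predicted as class 1.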

📌 Hyperparameters for better performance

Most ML models have many parameters that affect the final performance of the fitted model. RandomForestClassifier alone has a great many. Parameters like these, which affect performance and have to be set by a person rather than learned, are called hyperparameters.

One of the simplest ways to find good hyperparameters is randomized search, which is given a range for each hyperparameter and searches within it. The example here searches over two of them: n_estimators and max_depth.

- RandomizedSearchCV(): samples values at random from the given ranges and tries only a fixed number of combinations, training a RandomForestClassifier with each sampled n_estimators and max_depth.

      from sklearn.model_selection import RandomizedSearchCV
      from scipy.stats import randint

      param_dists = {'n_estimators': randint(1, 5), 'max_depth': randint(5, 10)}
      search = RandomizedSearchCV(estimator=RandomForestClassifier(), n_iter=5, param_distributions=param_dists)
      search.fit(X_train, y_train)
      print(search.best_params_)
      print(search.score(X_test, y_test))

  n_iter is the number of parameter combinations that are sampled and tried.

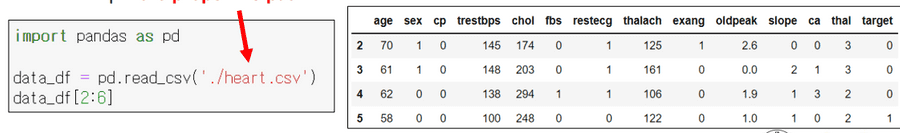
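A self-contained, repeatable variant of the search might look like the sketch below. The `random_state` values and the explicit `cv=5` are assumptions added for reproducibility; the parameter ranges are the ones used above.

```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV, train_test_split

dataset = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    dataset.data, dataset.target, test_size=0.1, random_state=0
)

# randint(low, high) samples integers from low up to, but not including, high.
param_dists = {"n_estimators": randint(1, 5), "max_depth": randint(5, 10)}

search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_distributions=param_dists,
    n_iter=5,        # number of parameter combinations to sample
    cv=5,            # 5-fold cross-validation per combination
    random_state=0,  # arbitrary seed for reproducibility
)
search.fit(X_train, y_train)

best = search.best_params_
print(best)
print(len(search.cv_results_["params"]))
```

Each sampled combination is scored by cross-validation on the training data, so the test set stays untouched until the final `search.score(X_test, y_test)` call.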

📌 Training on an external CSV

<Two kinds of data>

- Structured data: data that can be represented as a table, e.g. timeline data

- Unstructured data: data that cannot be represented as a table, e.g. images, video, documents, audio

ML algorithms are well suited to analyzing structured data, that is, tabular data, and pandas is the tool used to handle it.

- read_csv(path): loads CSV data. This is how the contents of a CSV file are brought in through pandas.

- groupby(column): groups rows by the distinct values of a column.

      print(data_df.groupby('target').size())
      # target
      # 0    499
      # 1    526
      # dtype: int64

- drop(columns=?, axis=?): returns the result with the given rows or columns removed. axis selects what is dropped: 0 for rows, 1 for columns. When columns= is given, axis is redundant.

      X = data_df.drop(columns="target", axis=1)
      y = data_df["target"]
      X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=2)
      print(X_train.shape, X_test.shape)
      print(y_train.shape, y_test.shape)
      # (768, 13) (257, 13)
      # (768,) (257,)

      rf_cls = RandomForestClassifier()
      rf_cls.fit(X_train, y_train)
      print(accuracy_score(y_test, rf_cls.predict(X_test)))
      print(confusion_matrix(y_test, rf_cls.predict(X_test)))

  RandomForestClassifier is a classification model, so fit and predict work exactly as before.
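The `groupby` and `drop` calls can be tried without the CSV file. The sketch below uses a tiny in-memory table as a stand-in; the column names `age` and `chol` and all values are hypothetical, and only the `target` column name comes from the code above.

```python
import pandas as pd

# Hypothetical miniature table standing in for the CSV; values are invented.
data_df = pd.DataFrame(
    {
        "age": [63, 45, 52, 58],
        "chol": [233, 250, 204, 236],
        "target": [1, 0, 1, 1],
    }
)

# Number of rows per label value.
counts = data_df.groupby("target").size()
print(counts.to_dict())

# Separate the features from the label column.
X = data_df.drop(columns="target")
y = data_df["target"]
print(list(X.columns), y.tolist())
```

`drop` returns a new DataFrame, so `data_df` itself still contains the `target` column afterwards.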

Models to train

- Decision tree: a binary tree of decisions in which each node is a fork that splits the data one way or the other.
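The forking structure can be made visible with scikit-learn's `export_text`, which prints the learned splits. This is a minimal sketch on the Iris data; `max_depth=2` and the seed are arbitrary choices to keep the output short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

dataset = load_iris()

# A shallow tree keeps the printed rules short; max_depth=2 is an arbitrary choice.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(dataset.data, dataset.target)

# export_text prints the learned if/else splits, one branch per line.
rules = export_text(tree, feature_names=list(dataset.feature_names))
print(rules)
print(tree.get_depth())
```

Each printed line is one fork: samples whose feature value is at or below the threshold go one way, the rest go the other.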